Today, I finally got the house to myself for long enough to take the home network down and swap our Verizon FiOS router out with a new Ubiquiti Unifi Cloud Gateway Ultra. I had spent a good while researching how to do this, and a week or so ago, I wrote out a step-by-step plan. As with everything in life, it didn’t go quite according to script, but overall, it went smoothly. Over the next few days, we’ll see if anything needs adjusting, but for now, the network is back up, connected, and ostensibly working fine. Here’s how it actually went down.

- I gathered everything noted in step 1 of last week’s list: MAC addresses for static leases, local DNS names, SSH credentials for the Unifi devices, and an Ethernet adapter and cable for the laptop. I also downloaded the Unifi app for my phone and made sure I had access to my ui.com login credentials. Of these, the only things that proved essential were the Ethernet adapter/cable and my ui.com credentials. In particular, I did not need the phone app (read on).

- I created a full backup of my old Docker-based Unifi controller. It was about 13 MB.

- My original list included a step to remove the FiOS router from the Unifi controller’s device list before creating a backup of the settings. This was neither necessary nor even possible: it turns out the Unifi controller doesn’t treat third-party routers as managed network devices, so there was no device to remove in the first place.

- I created a settings-only backup of the old controller, which was only about 25 KB. However, I ended up not using it.

- I powered the FiOS gateway down and unplugged it, without doing anything special to release its DHCP lease. I ended up having to briefly reconnect it to identify the cable running to the ONT (I have a bunch of disconnected Cat 6 cables in my wiring closet). Lesson learned: mark the cable somehow before disconnecting it and walking away. 😀

- I powered the UCG-Ultra up and connected the ONT cable to its WAN port. My original list had me connecting the Ethernet cable first, but the setup guide says to connect power first. In reality, I suspect it doesn’t matter much.

- The display on the UCG lit up with the Unifi logo, then a progress meter appeared at the bottom, and after a few minutes it displayed a message saying it was ready to be configured and reachable at 192.168.1.1.

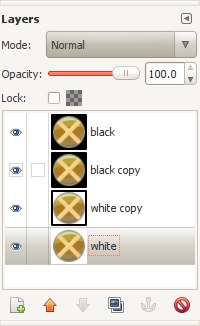

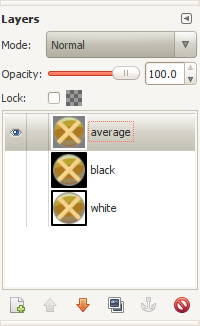

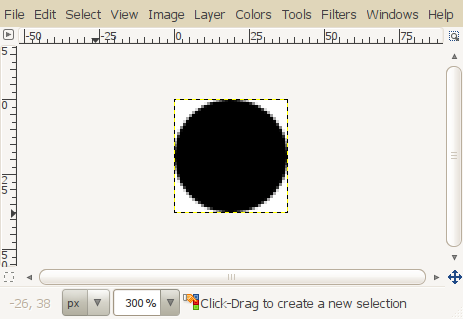

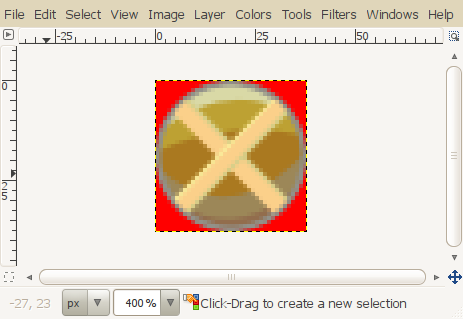
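Since the display reported that the UCG was reachable at 192.168.1.1, and the browser later sat on stale screens through both the firmware upgrade and the restore restart, a small script can take some of the guesswork out of knowing when the web interface is answering again. The following is a minimal Python sketch, not anything from Ubiquiti: `wait_for_port` is a hypothetical helper, and it assumes the web interface listens on TCP port 80 (matching the plain http:// URL used for setup; use 443 if it redirects to HTTPS). A successful connection only shows that something is listening, not that an upgrade or restore has actually finished.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 900.0,
                  interval: float = 10.0, connect_timeout: float = 3.0) -> bool:
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass.

    Returns True as soon as a connection is accepted, False if the deadline
    expires first. A successful connect only means something is listening;
    it does not prove the gateway has finished upgrading or restoring.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError (including timeouts and
            # "connection refused") while the port is not reachable yet.
            with socket.create_connection((host, port), timeout=connect_timeout):
                return True
        except OSError:
            pass
        # Give up if another wait would take us past the deadline.
        if time.monotonic() + interval > deadline:
            return False
        time.sleep(interval)
```

For example, `wait_for_port("192.168.1.1", 80, timeout=15 * 60)` would retry every 10 seconds for up to 15 minutes, roughly how long the upgrade actually took here.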

- This is where I thought I needed to connect with the mobile app over Bluetooth, but it turns out that’s not necessary: the initial setup can be done over Ethernet from a laptop connected to one of the UCG’s LAN ports. All I had to do was connect the laptop and point a browser at http://192.168.1.1. At first I didn’t think it was working, as all I saw was a black screen. It turned out that the setup page either doesn’t like Firefox or doesn’t like one of my Firefox extensions or settings. When I tried Chrome, it brought up a splash screen and let me proceed to configuration. I chose the option to restore from a backup, which prompted me to log in with my ui.com credentials and then dumped me into the UCG’s web interface.

- The setup updated the UCG’s firmware/controller to version 8.2.93, which is the latest version as of this writing and also the same version I was running on the old Docker controller. I had this listed as a separate step, but it all happened automatically. It’s worth noting that during the upgrade, it displayed a screen saying it would take “about 5 minutes”, but it seemed to stay on that screen indefinitely. After 10 or 15 minutes, I tried reconnecting and found that the upgrade had completed.

- At some point during this whole process, external Internet connectivity started working on my laptop. I can’t remember exactly when, but I’m pretty sure it was before I restored the backup from the old controller; I suspect it was right after I “adopted” the UCG into my Unifi account. Initially, Firefox displayed a “captive portal” banner similar to the ones I’m used to seeing on public guest WiFi networks.

- I restored the full backup from the old controller, which took a couple of minutes and required a restart of the UCG. Again, the browser experience during the restart wasn’t the smoothest, but the UCG came back up just fine shortly afterward.

- At this point, I didn’t have any of the downstream network equipment connected to the UCG. I had planned to manually add static DHCP leases for the devices that needed them, but this wasn’t possible: after the restore, the UCG already “knew” about the device I tried to add and told me that its MAC address already existed. I couldn’t find a way to reserve a lease for a device while it was disconnected, so I just moved on.

- I connected my downstream gear to the UCG’s LAN ports, and after a few minutes everything was working with external connectivity, WiFi included, and each device had the same IP address it had before I swapped the router out. I’m not sure what will happen with regard to the existing DHCP leases, but I assume the UCG will simply treat them as new leases. Its default DHCP lease lifetime is 86400 seconds (24 hours). At this point, I was also able to go into the devices that needed static IPs and mark them as “Fixed IP Address” (accessible by selecting the device and then clicking the settings icon). I assume that will do what I need.

- Next up was to go to the old controller and override the inform URL for the Unifi gear so that it would all start talking to the new controller. However, to my bemusement, I found that everything had already moved over without my doing anything. I thought this might be a new “feature” of some kind, but it turned out to have happened completely by accident. I logged into one of my APs via SSH and looked at its log file. The original inform address was configured with a local DNS name (rather than an IP address), and that name was in turn defined on the old FiOS router. When I took the old router offline, the devices could no longer resolve the name. After several unsuccessful retries, they eventually fell back on 192.168.1.1, which is where I wanted them anyhow. A happy coincidence.
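The accidental migration described in the last step can be modeled roughly as follows. This is a simplified Python sketch of the behavior observed in the AP’s log, not Ubiquiti’s actual firmware logic: the retry count, the example inform hostname `unifi.home.lan`, and the function name `choose_inform_host` are all hypothetical. The resolver is passed in as a parameter so the fallback path can be exercised without touching real DNS.

```python
import socket
from typing import Callable
from urllib.parse import urlsplit

# Where the devices ended up, per the AP's log.
FALLBACK_HOST = "192.168.1.1"


def choose_inform_host(inform_url: str,
                       resolve: Callable[[str], str] = socket.gethostbyname,
                       retries: int = 3) -> str:
    """Return the address a device would contact for inform, in this model.

    Tries to resolve the hostname in `inform_url` up to `retries` times
    (an assumed count); if every attempt fails, as happened once the FiOS
    router's local DNS entry was gone, falls back to FALLBACK_HOST.
    An inform URL that already uses an IP address resolves to itself.
    """
    host = urlsplit(inform_url).hostname
    if host is None:
        return FALLBACK_HOST
    for _ in range(retries):
        try:
            return resolve(host)
        except OSError:  # socket.gaierror is a subclass of OSError
            continue
    return FALLBACK_HOST
```

Injecting the resolver keeps the sketch testable; with the default `socket.gethostbyname`, it uses the DNS configuration of whatever machine runs it, which may not match what the APs see.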

Still to do:

- Factory-reset the old FiOS router to prepare it for return to Verizon. I’m hoping this can be done with a physical reset button on the router. If not, I’ll need to hook it up to an isolated network somehow so I can reach its web interface.

- Figure out a local DNS strategy. I want to eventually route all of our DNS traffic through a Pi-hole, but I’m not sure whether I want to manage local DNS names there or on the UCG, or whether I want the UCG in front of the Pi-hole or vice versa. The FiOS router didn’t let me change the DNS server(s) it handed out via DHCP, so some of these configurations wouldn’t have been possible before. I’ll have to think about this a bit.

- Finally cancel our landline phone. I think I can get a 1G/1G FiOS connection for less than I’m paying now for 512/512 FiOS plus an essentially useless landline.
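Whichever DNS layout wins (Pi-hole in front of the UCG or the other way around), it will be worth confirming afterward that the local names gathered in step 1 still resolve to the addresses they should, since a missing local DNS entry is exactly what redirected the inform traffic earlier. Below is a minimal Python sketch, assuming a hand-maintained mapping of hostnames to expected IPv4 addresses; the names and addresses in `EXPECTED` are placeholders, not real entries from this network. By default it uses the operating system’s resolver, so it tests whatever DNS server the machine received via DHCP.

```python
import socket
from typing import Callable, Dict, List, Tuple

# Hypothetical placeholder entries; replace with the real local DNS names.
EXPECTED: Dict[str, str] = {
    "nas.home.lan": "192.168.1.10",
    "pihole.home.lan": "192.168.1.53",
}


def check_local_dns(expected: Dict[str, str],
                    resolve: Callable[[str], str] = socket.gethostbyname
                    ) -> List[Tuple[str, str, str]]:
    """Resolve each hostname and return (name, expected, actual) mismatches.

    `actual` is the resolved IPv4 address, or "unresolved" if the lookup
    failed. An empty list means every name resolved to its expected address.
    """
    problems = []
    for name, want in sorted(expected.items()):
        try:
            got = resolve(name)
        except OSError:  # socket.gaierror for unknown names
            got = "unresolved"
        if got != want:
            problems.append((name, want, got))
    return problems
```

A quick run might print each mismatch with `for name, want, got in check_local_dns(EXPECTED): print(f"{name}: expected {want}, got {got}")`, once before and once after any DNS change.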